Step I: Setting up Hadoop

I am installing Hadoop in /usr/local/hadoop. After extracting the Hadoop archive, run the command below to move it to /usr/local/hadoop:

    sudo mv hadoop-2.7.3 /usr/local/hadoop

Step II: Hadoop Configuration

Adding HADOOP_PREFIX to .bashrc

    export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

This adds the bin and sbin directories of our Hadoop folder to the PATH. Similarly, we have to set the HADOOP_PREFIX path in hadoop-env.sh.
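The environment setup from Step II can be sketched as the lines below. This is only a sketch under assumptions: the source shows the PATH line but not the definitions of HADOOP_HOME or HADOOP_PREFIX, so pointing both at /usr/local/hadoop (the install location from Step I) is an assumption, as is the final check for the hadoop launcher script.

```shell
#!/usr/bin/env bash
# Assumption: Hadoop was moved to /usr/local/hadoop as in Step I.
# The source does not show how HADOOP_HOME is defined; this default is a guess.
export HADOOP_HOME="${HADOOP_HOME:-/usr/local/hadoop}"

# Assumption: HADOOP_PREFIX points at the same install directory.
export HADOOP_PREFIX="$HADOOP_HOME"

# This line is taken from the tutorial: put the Hadoop commands on the PATH.
export PATH="$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin"

# Report whether the hadoop launcher is where we expect it.
if [ -x "$HADOOP_HOME/bin/hadoop" ]; then
    echo "hadoop launcher found in $HADOOP_HOME/bin"
else
    echo "hadoop launcher not found in $HADOOP_HOME/bin (check the install path)"
fi
```

If these lines are placed in ~/.bashrc, they take effect in new terminals; an already open terminal picks them up after running `source ~/.bashrc`.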

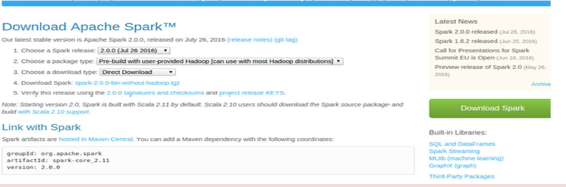

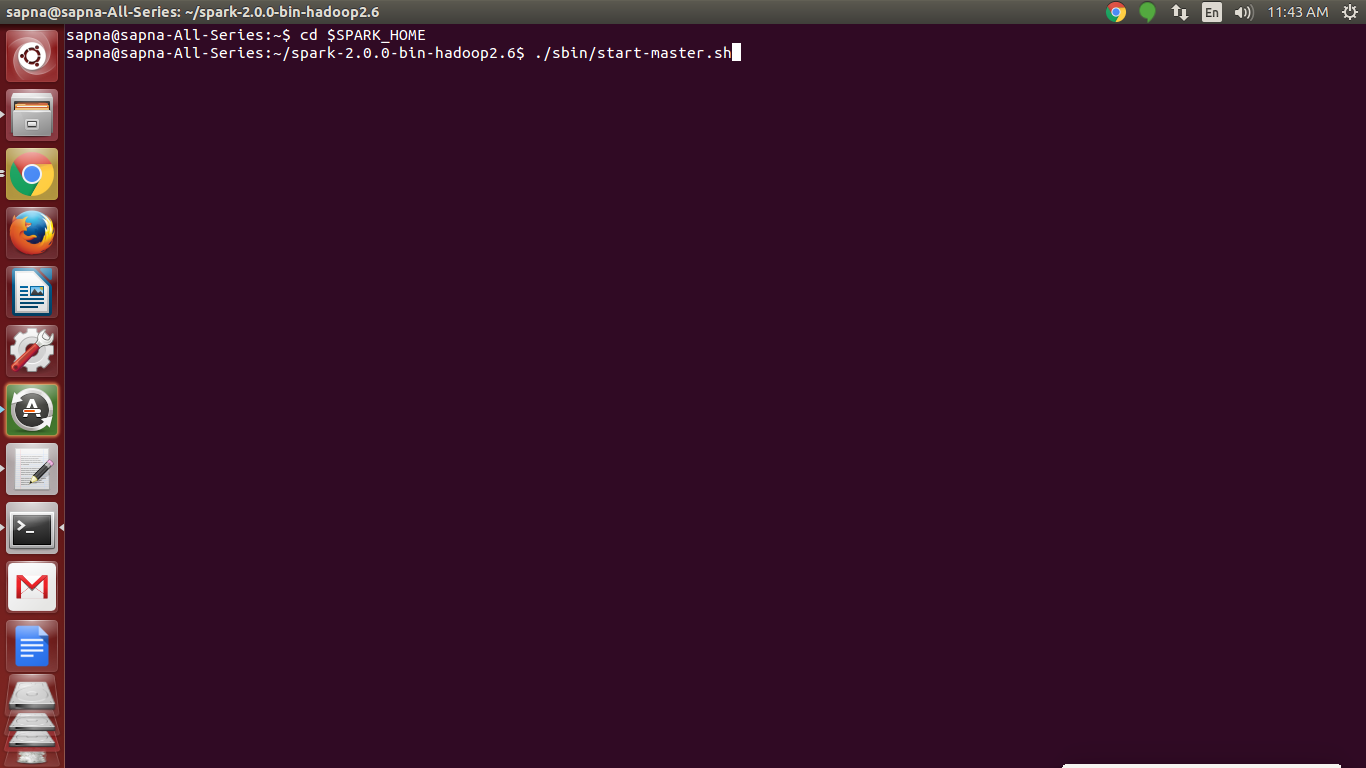


Open the .bashrc file using the command

    sudo gedit ~/.bashrc

and add the same lines that were added to /etc/environment there as well.

Verify JAVA_HOME by running the command

    echo $JAVA_HOME

If everything is correct, it will display the JAVA_HOME location.

Configuring ssh

For installing Hadoop, we have to configure ssh. First, install ssh by running the command

    sudo apt install ssh

Next, open a terminal and generate an ssh key pair; the public key is stored in ~/.ssh/id_rsa.pub. Now, we have to add that key to the authorized keys using the following command:

    cat ~/.ssh/id_rsa.pub > ~/.ssh/authorized_keys

After this, an ssh login to the local machine must succeed without asking for a password. If it asks for a password, repeat the ssh configuration steps above.

Installing Hadoop in Pseudo Distributed Mode

Download hadoop-2.7.3 from this link. After downloading, extract it to the folder where you want to install Hadoop.
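The authorized-keys step in the ssh configuration can be sketched as a small helper. Note that the tutorial's `cat ... > ~/.ssh/authorized_keys` overwrites any keys already in that file; the sketch below appends instead and skips the key if it is already present. The function name `authorize_key` is hypothetical, and the sketch assumes a key pair already exists (for example one created with `ssh-keygen -t rsa`, which is an assumption since the source does not show its key-generation command).

```shell
#!/usr/bin/env bash
# authorize_key PUBKEY_FILE AUTH_FILE
# Hypothetical helper: append a public key to an authorized_keys file
# without overwriting existing entries and without adding duplicates.
authorize_key() {
    local pubkey_file="$1" auth_file="$2"
    local key
    key="$(cat "$pubkey_file")"

    # Make sure the target file exists before searching it.
    mkdir -p "$(dirname "$auth_file")"
    touch "$auth_file"

    # -x: match whole lines, -F: treat the key as a fixed string.
    if ! grep -qxF -- "$key" "$auth_file"; then
        printf '%s\n' "$key" >> "$auth_file"
    fi

    # sshd ignores authorized_keys files with overly open permissions.
    chmod 600 "$auth_file"
}

# Typical call on a real machine (not executed here):
#   authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
```

After authorizing the key, running `ssh localhost` is one way to confirm that the login no longer asks for a password.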


Hadoop is an open source, Java-based programming framework that supports the processing and storage of extremely large data sets in a distributed computing environment. It is part of the Apache project sponsored by the Apache Software Foundation.

Installing java

Check the Java installation on your system by typing "java -version" in a terminal. It will display the Java installation details. If Java is not installed, install it using the command

    sudo apt install openjdk-8-jdk-headless

Setting JAVA_HOME path

Open the /etc/environment file with any editor such as gedit or nano, for example with the command

    sudo gedit /etc/environment

and add the following line (your JDK name may differ; to check it, run cd /usr/lib/jvm/ and list the directory):

    JAVA_HOME="/usr/lib/jvm/java-8-openjdk-amd64"

Now, to apply the changes, we have to reload /etc/environment.
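The Java check and the JDK-name lookup can be combined in a short script. This is a minimal sketch, not part of the original tutorial: the function name `list_jdks` and the `JVM_DIR` variable are hypothetical, and /usr/lib/jvm is assumed as the default location because that is where the tutorial's JAVA_HOME points.

```shell
#!/usr/bin/env bash
# Assumption: JDKs live under /usr/lib/jvm, as in the tutorial's JAVA_HOME value.
JVM_DIR="${JVM_DIR:-/usr/lib/jvm}"

# Report the installed Java version, or say how the tutorial installs it.
if command -v java >/dev/null 2>&1; then
    java -version 2>&1 | head -n 1
else
    echo "java not found; the tutorial installs it with: sudo apt install openjdk-8-jdk-headless"
fi

# list_jdks DIR
# Hypothetical helper: print each directory directly under DIR, one per line.
# Each printed path is a candidate value for JAVA_HOME.
list_jdks() {
    local dir="$1"
    [ -d "$dir" ] || return 0
    find "$dir" -mindepth 1 -maxdepth 1 -type d | sort
}

list_jdks "$JVM_DIR"
```

One of the printed paths, such as /usr/lib/jvm/java-8-openjdk-amd64, is what goes into the JAVA_HOME line. Because /etc/environment is read at login, logging out and back in is one way to make a change there take effect.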
